Precision is everything in industrial automation, from meeting strict manufacturing tolerances to ensuring accurate custody transfers in the Oil & Gas sector. These high-stakes environments demand technologies that are not only powerful but also trustworthy. That is why the accuracy and reliability of artificial intelligence (AI), particularly the large language models (LLMs) behind generative AI tools such as chatbots, are critical.

AI hallucinations, when systems confidently generate false or misleading information, pose a real risk.

In 2023, Google’s Bard incorrectly claimed the James Webb Space Telescope had taken the first photo of an exoplanet, a milestone actually achieved in 2004. The factual error, flagged by experts, contributed to a $100 billion drop in Alphabet’s market value.

In another case, two New York lawyers relied on ChatGPT to draft a legal brief. The AI generated convincing but completely fabricated case citations. The result: a $5,000 court fine and serious damage to their professional credibility.

Even Microsoft’s AI-powered Bing shocked users with erratic behavior, ranging from professing love to making bizarre threats, raising alarms about deploying generative AI in public tools.

Google’s AI Overviews feature once falsely claimed that Apollo 11 astronauts played with cats on the moon, even inventing quotes from Neil Armstrong to support the story.

In one academic setting, a university librarian checked the article references that ChatGPT had generated for a professor and discovered that none of the sources actually existed. The problem is not isolated: a study titled “High Rates of Fabricated and Inaccurate References in ChatGPT-Generated Medical Content” examined 115 references that ChatGPT produced for 30 short medical papers and found that 47% were fabricated, 46% were authentic but inaccurate, and only 7% were both authentic and accurate.

These stories may sound amusing, but the consequences are not. In fields like industrial automation, where safety, quality, and compliance are non-negotiable, trust in AI must be earned and maintained. Without it, the promise of intelligent automation quickly becomes a liability.

Why Do Hallucinations Occur?

Human Reasoning

When people are asked a question, they typically reason through it logically. We draw on prior knowledge, connect facts, and make judgments to arrive at an informed answer.

How LLMs Work

Large language models (LLMs), on the other hand, do not “reason” in the human sense. Instead, they generate responses by predicting the next word (more precisely, the next token, which may be a whole word or a fragment of one) based on patterns in the data they were trained on. They are excellent mimics, which makes their output sound highly convincing; however, it is essential to remember that the underlying process is fundamentally different from human reasoning. The appearance of understanding is not the same as genuine comprehension.
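To make the idea of “predicting the next word” concrete, here is a deliberately tiny sketch: a bigram model that only counts which word follows which. Real LLMs use large neural networks over subword tokens, so this is an illustration of the principle, not of how any production model is built. The corpus and function names are made up for the example. Trained on sentences saying the pump is running and that a valve, a tank, and a gate are closed, it fluently produces “the pump is closed”, a statement that appears nowhere in its training data.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            model[current][following] += 1
    return model


def generate(model: dict[str, Counter], start: str, max_words: int = 10) -> str:
    """Repeatedly append the most frequent follower of the last word."""
    words = [start]
    for _ in range(max_words):
        followers = model.get(words[-1])
        if not followers:
            break  # nothing was learned after this word, so stop
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "the pump is running",
    "the pump is running smoothly",
    "the valve is closed",
    "the tank is closed",
    "the gate is closed",
]
model = train_bigrams(corpus)
print(generate(model, "the"))  # prints "the pump is closed"
```

Each step is locally plausible (“is closed” is the most common continuation of “is”), yet the sentence as a whole is unsupported. Nothing in the process checks it against reality.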

Common Causes of Hallucinations in LLMs

Understanding the root causes of hallucinations is critical when deploying LLMs in high-stakes environments such as industrial automation, where precision, reliability, and safety are paramount.

Deficiencies in Training Data

LLMs are trained on vast datasets, and their outputs reflect the quality of that data. If the training data contains outdated information, factual inaccuracies, biases, or omissions, the model is likely to reproduce those issues in its responses. As the saying goes, “Garbage in, garbage out.”

Limited Contextual Awareness

LLMs can track short-term context within a fixed context window (the amount of input, measured in tokens, that the model can process at once), but anything that falls outside that window is simply not visible to the model, so coherence often degrades over extended interactions. They also lack a true understanding of context or intent and have no inherent common-sense reasoning, which can result in misinterpretations or off-topic responses.
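The sketch below shows one common way a chat application might fit a conversation into a limited window: keep the newest messages that fit and drop the rest. It is an assumption for illustration, not any particular vendor’s implementation, and it approximates tokens by whitespace-separated words (real tokenizers count differently). The 16-token budget and the messages are hypothetical.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the window.

    Tokens are approximated by whitespace-separated words here; real
    models use subword tokenizers, so actual counts differ.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "Operator: Line 3 uses the 80 °C temperature limit.",
    "Operator: Check the conveyor speed.",
    "Assistant: Conveyor speed is 1.2 m/s.",
    "Operator: What temperature limit applies here?",
]
visible = fit_to_context(history, max_tokens=16)
print(visible)
```

With a 16-token budget only the last two messages survive, so the model is asked about the temperature limit without ever seeing the message that stated it. A fluent guess is the likely result.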

Statistical Nature of Generation

LLMs generate language by predicting a statistically likely next word based on learned patterns (often sampling from a probability distribution rather than always taking the single most probable option), not by verifying facts or applying logic. This statistical approach can produce fluent and persuasive text that may still contain factual inaccuracies or misleading information.
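A small worked example helps show why fluency and accuracy are separate things. Language models typically turn raw scores (logits) into probabilities with a softmax, optionally divided by a “temperature”, and then pick or sample a token. The scores below are invented for illustration, echoing the Bard example: if training patterns happen to favor “JWST” after a phrase about the first exoplanet image, that wrong answer gets the most probability, and nothing in the arithmetic checks the fact.

```python
import math
import random


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical scores for the word after "The first exoplanet image was taken by the ..."
logits = {"JWST": 2.0, "VLT": 1.5, "Hubble": 1.0}
probs = softmax(logits)
print({tok: round(p, 3) for tok, p in probs.items()})
# prints {'JWST': 0.506, 'VLT': 0.307, 'Hubble': 0.186}

# Sampling: even less likely tokens are chosen some of the time.
rng = random.Random(0)
next_token = rng.choices(list(probs), weights=list(probs.values()))[0]
```

Lowering the temperature makes the top-scoring token even more dominant, which makes output more consistent but not more correct.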

Overgeneration and Artificial Creativity

LLMs are designed to produce novel and human-like text. While this is beneficial for many applications, it can lead to fabricated or fictional content that appears credible. In technical domains, such hallucinations can cause serious operational or safety problems if not adequately mitigated.

LLMs have no built-in mechanism to determine whether they are hallucinating. Unlike humans, who verify information by cross-referencing structured, trusted, and factual sources, LLMs lack the ability to fact-check or validate their outputs. They are not designed to assess truthfulness, only to generate plausible-sounding text based on learned patterns. Simply put, there is no internal reference point for the model to confirm whether the information it provides is accurate.

How Can LLM Hallucinations Be Reduced?

Reducing hallucinations in LLMs requires more than just high-quality training data. It involves advanced strategies that enhance reliability and factual accuracy through structured knowledge integration and intelligent system design. One such approach is Hybrid AI.

What Is Hybrid AI?

Hybrid AI combines the strengths of different AI methodologies, particularly rule-based systems (symbolic AI) and machine learning (ML). This approach offers a balanced solution: rule-based systems ensure consistency and logical correctness, while machine learning provides adaptability and data-driven insights.

Why Hybrid AI Helps Reduce Hallucinations

LLMs generate responses based on statistical predictions, not fact-checking. They cannot verify their own output. Hybrid AI addresses this limitation in the following ways:

Rule-Based Systems as Guardrails

Rule-based systems operate using predefined logic. They enforce constraints that catch or block outputs that violate known facts or limits, such as a suggested setpoint outside an equipment’s rated range, before those outputs reach an operator or a controller. This is particularly important in regulated or safety-critical environments such as industrial automation, where hallucinations can lead to costly or dangerous errors.
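As a minimal sketch of a guardrail, assume an LLM or ML model proposes setpoints for named tags, and a rule layer accepts a proposal only if it falls within engineering limits defined by people. The tag names, limits, and `check_suggestion` function are hypothetical and are not part of any ADISRA API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float
    unit: str


# Hypothetical engineering limits; in practice these come from equipment specs.
LIMITS = {
    "reactor_temp_setpoint": Limit(20.0, 180.0, "°C"),
    "pump_speed_setpoint": Limit(0.0, 3600.0, "rpm"),
}


def check_suggestion(tag: str, value: float) -> tuple[bool, str]:
    """Accept a model-suggested setpoint only if a rule says it is in range."""
    limit = LIMITS.get(tag)
    if limit is None:
        # Fail closed: a tag with no rule is never written automatically.
        return False, f"rejected: no rule defined for '{tag}'"
    if not limit.low <= value <= limit.high:
        return False, (f"rejected: {value} {limit.unit} is outside "
                       f"{limit.low}-{limit.high} {limit.unit}")
    return True, "accepted"


print(check_suggestion("reactor_temp_setpoint", 250.0))  # out of range
print(check_suggestion("reactor_temp_setpoint", 150.0))  # within limits
print(check_suggestion("mixer_speed_setpoint", 50.0))    # unknown tag
```

Failing closed on unknown tags is a deliberate design choice here: a hallucinated tag name is rejected rather than passed through.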

Machine Learning for Flexibility and Pattern Recognition

ML models are capable of learning from large volumes of data to identify trends, patterns, and anomalies. However, their outputs are only as good as the data they are trained on. When used alone, they may produce hallucinations if trained on biased or flawed information.

Blending Logic with Adaptability

By combining rule-based precision with ML adaptability, Hybrid AI creates systems that are both reliable and flexible. For example, in predictive maintenance, machine learning can detect early signs of equipment failure through sensor data, while rule-based logic ensures that any recommended actions comply with safety protocols and operational constraints.

This division of labor reduces the chance of false positives or hallucinated defect detection, improving both accuracy and efficiency.
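The predictive maintenance example can be sketched as two layers. A simple z-score anomaly detector stands in for the machine learning model (a real deployment would use a trained model), and a rule layer decides what to do with its scores. The thresholds, the persistence requirement, and the standby-pump rule are hypothetical choices for illustration.

```python
from statistics import mean, stdev


def anomaly_score(history: list[float], reading: float) -> float:
    """Stand-in for an ML model: z-score of a new reading vs. recent history."""
    mu, sigma = mean(history), stdev(history)
    return abs(reading - mu) / sigma if sigma > 0 else 0.0


def recommend(scores: list[float], standby_available: bool,
              threshold: float = 3.0, persistence: int = 3) -> str:
    """Rule layer: turn ML scores into an action that respects constraints."""
    recent = scores[-persistence:]
    # Rule 1: require several consecutive high scores to suppress one-off spikes.
    if len(recent) < persistence or min(recent) < threshold:
        return "no action"
    # Rule 2: never take the pump offline unless a standby unit can take over.
    if standby_available:
        return "switch to standby pump and schedule bearing inspection"
    return "alert operator: bearing wear suspected, no standby available"


baseline = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8, 2.0, 2.1]  # vibration, mm/s
readings = [2.1, 3.5, 3.6, 3.8]                      # sustained rise
scores = [anomaly_score(baseline, r) for r in readings]
print(recommend(scores, standby_available=True))
```

The ML layer only says “this looks unusual”; the rules decide whether that is persistent enough to act on and which action is operationally allowed.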

Unlocking Intelligence: ADISRA’s Built-In Rule-Based Expert System

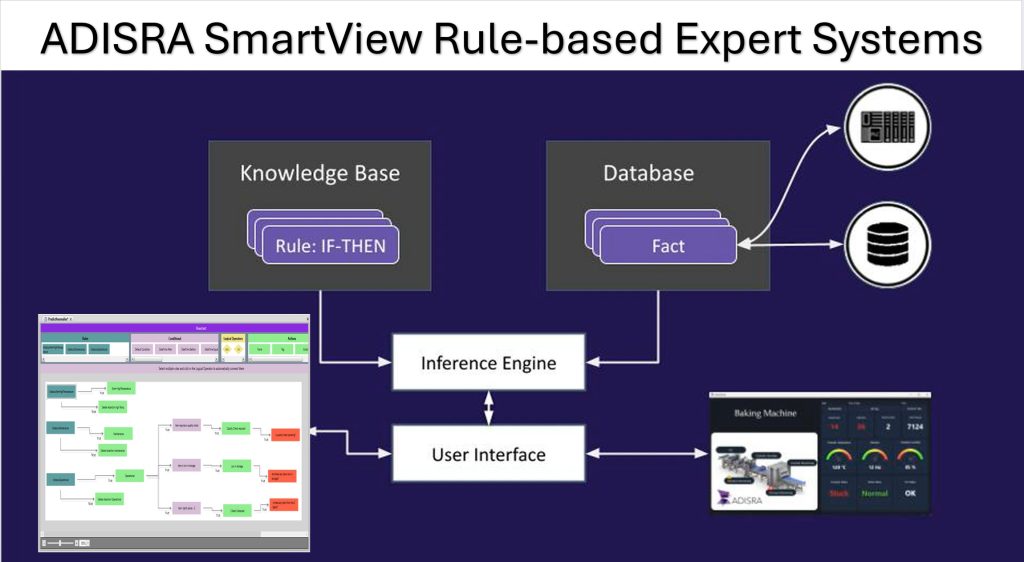

ADISRA SmartView includes a built-in rule-based expert system as part of its core functionality. This system blends facts (hard data) and heuristics (rules of thumb or experience-based logic) to encapsulate specialized domain knowledge. Once configured, it can autonomously solve complex industrial automation problems by offering insights, making decisions, and triggering actions.

What Is a Rule-Based Expert System?

At its core, a rule-based expert system is a type of artificial intelligence that uses a series of “if-then” rules to simulate the decision-making ability of a human expert. It applies predefined logic to input data to recommend actions or automatically respond to events.

Key Components of a Rule-Based System Architecture

– Knowledge Base

Stores the set of defined rules and domain-specific logic.

– Inference Engine

The system’s reasoning core, which evaluates real-time data against the knowledge base to reach conclusions or take actions.

– Input/Output Interface

Input: Gathers real-time data from sensors or external systems.

Output: Executes decisions such as displaying alerts or controlling equipment.
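To show how these three components fit together, here is a minimal forward-chaining sketch in Python. It is a generic illustration, not ADISRA SmartView’s rule syntax: the rule names, tag names, and 90 °C threshold are hypothetical. The knowledge base is a list of if-then rules, the inference engine keeps firing rules until no new facts appear, and the input/output interface is reduced to a dictionary of sensor values in and derived commands out.

```python
from dataclasses import dataclass
from typing import Callable

Facts = dict[str, object]


@dataclass
class Rule:
    name: str
    condition: Callable[[Facts], bool]    # the "if" part
    conclusion: Callable[[Facts], Facts]  # the "then" part: facts to assert


# Knowledge base (hypothetical rules). The order is deliberately "wrong" to
# show that the engine still chains overheat -> trip_motor across cycles.
KNOWLEDGE_BASE = [
    Rule("trip_motor", lambda f: f.get("overheat") is True,
         lambda f: {"motor_cmd": "STOP", "alarm": "Motor overheat"}),
    Rule("overheat", lambda f: f.get("motor_temp_c", 0) > 90,
         lambda f: {"overheat": True}),
]


def infer(facts: Facts, rules: list[Rule], max_cycles: int = 10) -> Facts:
    """Forward chaining: fire rules until no rule adds or changes a fact."""
    memory = dict(facts)  # working memory; the caller's data is left untouched
    for _ in range(max_cycles):
        changed = False
        for rule in rules:
            if rule.condition(memory):
                for key, value in rule.conclusion(memory).items():
                    if memory.get(key) != value:
                        memory[key] = value
                        changed = True
        if not changed:
            break  # fixed point reached
    return memory


inputs = {"motor_temp_c": 97.5}          # input interface: a sensor snapshot
result = infer(inputs, KNOWLEDGE_BASE)
print(result["motor_cmd"], result["alarm"])  # output interface: act on results
```

The `max_cycles` cap is a safety net against rule sets that keep toggling a fact back and forth.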

Real-World Applications in HMI/SCADA with a Rule-Based Expert System

Rule-based expert systems embedded within ADISRA SmartView enable industrial processes to make intelligent decisions. Here are some examples:

– Temperature Monitoring

Detects when equipment exceeds safe temperature levels.

Example: Shuts down a machine and alerts operators to prevent damage.

– Automated Production Line Control

Adjusts machine parameters to ensure smooth operation.

Example: Slows down conveyor belts if a bottleneck is detected.

– Regulatory Compliance

Continuously monitors for adherence to safety and environmental standards.

Example: Triggers an alert when emissions exceed regulatory thresholds.

– Energy Efficiency Optimization

Modulates power usage based on demand.

Example: Powers down idle equipment during off-peak hours.

– Predictive Maintenance

Anticipates equipment failures by identifying early warning signs.

Example: Issues a notification when abnormal vibration indicates bearing wear.
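The five examples above can be expressed as a small rule table evaluated against one snapshot of tag values. This is a language-agnostic illustration in Python; the tag names, thresholds (including using queue length as a proxy for a bottleneck), and action strings are hypothetical and would be replaced by site-specific values and SmartView’s own configuration.

```python
from typing import Callable

Tags = dict[str, float]

# (application, condition, action); tag names and thresholds are hypothetical.
RULES: list[tuple[str, Callable[[Tags], bool], str]] = [
    ("Temperature monitoring", lambda t: t["machine_temp_c"] > 85.0,
     "Shut down machine and alert operators"),
    ("Production line control", lambda t: t["queue_length"] > 20,
     "Slow conveyor belt"),
    ("Regulatory compliance", lambda t: t["nox_ppm"] > t["nox_limit_ppm"],
     "Raise emissions alert"),
    ("Energy efficiency", lambda t: t["idle_minutes"] > 30 and t["off_peak"] >= 1,
     "Power down idle equipment"),
    ("Predictive maintenance", lambda t: t["vibration_mm_s"] > 7.1,
     "Notify maintenance: possible bearing wear"),
]


def evaluate(tags: Tags) -> list[str]:
    """Return the action of every rule whose condition holds for this snapshot."""
    return [f"{app}: {action}" for app, cond, action in RULES if cond(tags)]


snapshot = {"machine_temp_c": 72.0, "queue_length": 25, "nox_ppm": 48.0,
            "nox_limit_ppm": 50.0, "idle_minutes": 45, "off_peak": 1,
            "vibration_mm_s": 8.3}
for line in evaluate(snapshot):
    print(line)
```

Keeping rules as data rather than scattered code makes them easy to review with process engineers, which is a large part of why rule-based logic is considered explainable.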

You can read more about how ADISRA SmartView supports predictive maintenance in an earlier blog post, available here.

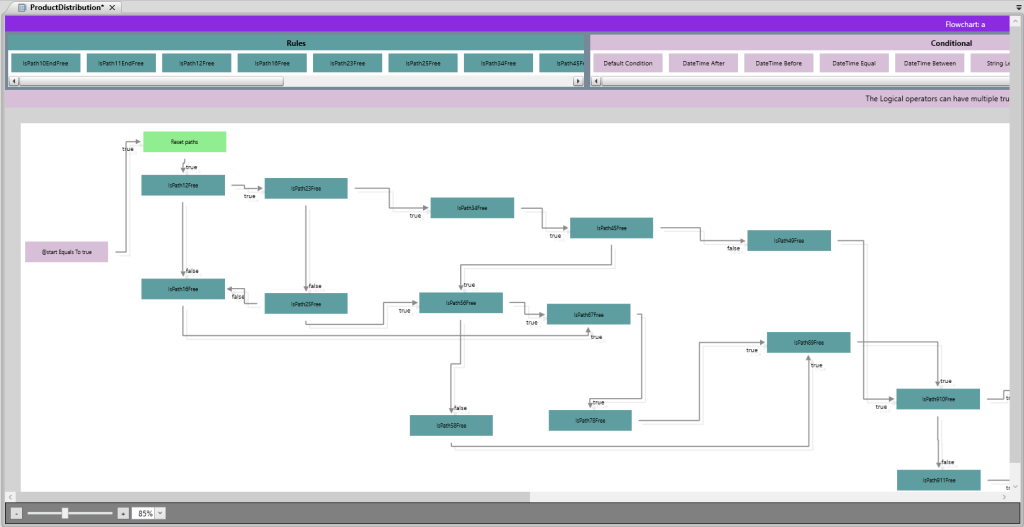

Visual Rule Design and Execution in ADISRA SmartView

ADISRA SmartView allows users to execute rules based on tag values, event triggers, or time-based intervals. Users can choose between a traditional logic editor and a visual block diagram interface, making it easy to model logic flows and automate tasks, such as triggering a sequence when a tank reaches a critical level.
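The three trigger styles can be pictured with a toy dispatcher. This is not SmartView’s API: the `RuleRunner` class, its method names, the 95% tank threshold, and the 60-second interval are all hypothetical, and time is passed in explicitly so the behavior is easy to follow.

```python
import math
from collections import defaultdict
from typing import Callable


class RuleRunner:
    """Toy dispatcher for three trigger styles: tag value, event, and timer."""

    def __init__(self) -> None:
        self.tag_rules: dict[str, list[Callable[[float], None]]] = defaultdict(list)
        self.event_rules: dict[str, list[Callable[[], None]]] = defaultdict(list)
        self.timer_rules: list[list] = []  # entries: [interval_s, last_run_s, action]

    def when_tag_changes(self, tag: str, action: Callable[[float], None]) -> None:
        self.tag_rules[tag].append(action)

    def on_event(self, event: str, action: Callable[[], None]) -> None:
        self.event_rules[event].append(action)

    def every(self, interval_s: float, action: Callable[[], None]) -> None:
        self.timer_rules.append([interval_s, -math.inf, action])

    def write_tag(self, tag: str, value: float) -> None:
        for action in self.tag_rules[tag]:
            action(value)

    def fire_event(self, event: str) -> None:
        for action in self.event_rules[event]:
            action()

    def tick(self, now_s: float) -> None:
        """Call periodically with the current time; runs timers that are due."""
        for timer in self.timer_rules:
            interval_s, last_run_s, action = timer
            if now_s - last_run_s >= interval_s:
                action()
                timer[1] = now_s


log: list[str] = []
runner = RuleRunner()
# Tag-value trigger: start a drain sequence when the tank is critically full.
runner.when_tag_changes(
    "tank_level_pct",
    lambda v: log.append("start drain sequence") if v >= 95.0 else None)
runner.on_event("operator_ack", lambda: log.append("clear alarm banner"))
runner.every(60.0, lambda: log.append("log tank level"))

runner.write_tag("tank_level_pct", 80.0)   # below threshold: nothing happens
runner.write_tag("tank_level_pct", 96.5)   # critical level: sequence starts
runner.fire_event("operator_ack")
for t in (0.0, 30.0, 60.0):                # timer fires at 0 s and 60 s
    runner.tick(t)
print(log)
```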

Other features include:

– Logging events and goals

– Storing history in SQL databases

– Dynamically changing facts and assertions at runtime
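The last two features work well together: when a fact changes at runtime, the history table records exactly when and why a rule began firing. The sketch below uses Python’s built-in `sqlite3` with an in-memory database as a stand-in for whichever SQL database a plant actually uses. The table layout, the `max_temp_c` fact, and the `check_temperature` rule are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")  # a file path or server database in practice
conn.execute("""CREATE TABLE rule_history (
    fired_at TEXT NOT NULL,
    rule     TEXT NOT NULL,
    detail   TEXT)""")

facts = {"max_temp_c": 85.0}  # a fact that can be changed while running


def check_temperature(temp_c: float) -> bool:
    """Fire the over-temperature rule and record each firing in SQL."""
    fired = temp_c > facts["max_temp_c"]
    if fired:
        conn.execute(
            "INSERT INTO rule_history VALUES (?, ?, ?)",  # parameterized query
            (datetime.now(timezone.utc).isoformat(), "overtemp",
             f"{temp_c} C > {facts['max_temp_c']} C"))
        conn.commit()
    return fired


check_temperature(82.0)     # below the 85 C limit: nothing is logged
facts["max_temp_c"] = 80.0  # tighten the limit at runtime, no redeploy
check_temperature(82.0)     # the same reading now fires and is logged
rows = conn.execute("SELECT rule, detail FROM rule_history").fetchall()
print(rows)  # prints [('overtemp', '82.0 C > 80.0 C')]
```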

Hybrid AI: Combining Rule-Based Logic with Machine Learning

By integrating machine learning with ADISRA SmartView’s rule-based system, you gain the best of both worlds. Rule-based logic provides reliability and explainability, while machine learning adapts to complex scenarios and learns from data.

This Hybrid AI approach:

– Reduces hallucinations by checking outputs against defined rules and verified facts

– Enables smarter automation through continuous learning

– Supports applications like predictive maintenance, quality control, robotics, and energy optimization

ADISRA engineers have already demonstrated a successful proof of concept integrating machine learning for vision systems with ADISRA SmartView. (Read that blog here.)

Conclusion

In industrial environments where trust, precision, and safety are paramount, AI technologies must rise to meet those expectations. While generative AI offers powerful capabilities, it also brings risks, especially when hallucinations can cause real-world harm. By adopting a Hybrid AI approach, combining machine learning with rule-based systems like those built into ADISRA SmartView, organizations can significantly reduce these risks. This fusion of flexibility and logic offers a smarter, safer path to automation.

Start building intelligent industrial automation solutions today with ADISRA SmartView; download it here.

Have a project in mind? Let us talk. Click this link to request a personalized demonstration with our team.

Curious about how ADISRA’s built-in rule-based expert system can elevate your automation strategy?

Join us for our upcoming webinar on June 24th at 9:30 AM CDT to explore how the built-in rule-based expert system in ADISRA SmartView transforms alarm management, diagnostics, and decision-making on the plant floor.

Webinar Title:

From Rules to Results: Harnessing the Power of Rule-Based Expert Systems in ADISRA SmartView

Discover how to transition from reactive operations to proactive performance with intelligent automation tools.

Register for the webinar here.

ADISRA®, ADISRA’s logo, InsightView®, and KnowledgeView® are registered trademarks of ADISRA, LLC.

Copyright

© 2025 ADISRA, LLC. All Rights Reserved.